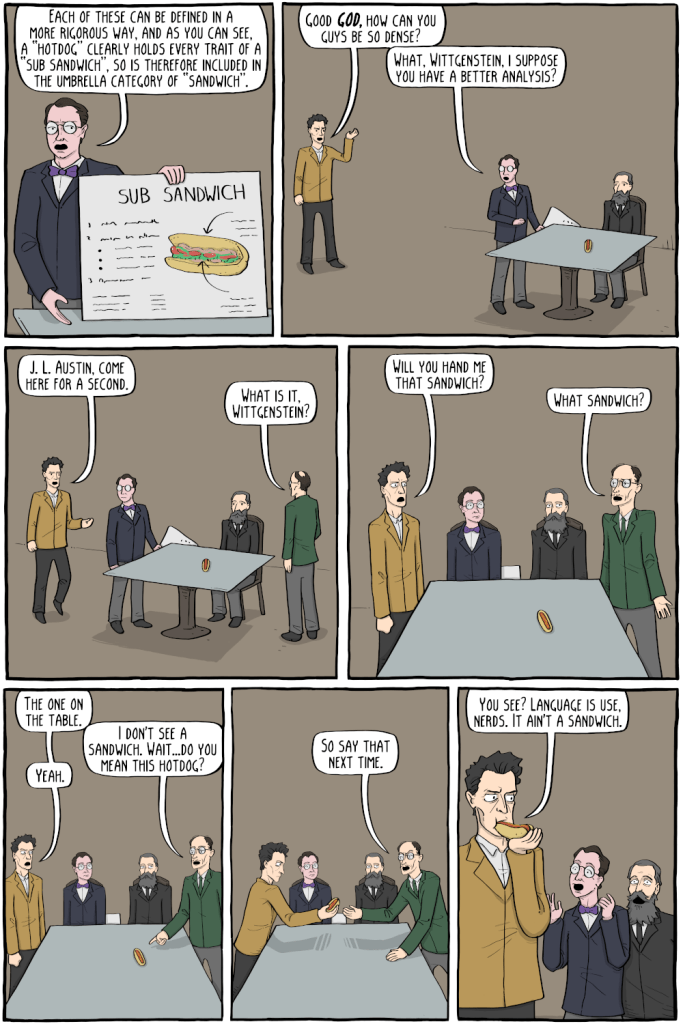

Existential Comics is an extremely nerdy webcomic about philosophers, written and drawn by Corey Mohler, a software engineer(!). My favorite Existential Comics strip is titled Is a Hotdog a Sandwich? A Definitive Study. The topic is… exactly what you would expect:

At the risk of explaining a joke: the punchline is that we can conclude that a hotdog isn’t a sandwich because people don’t generally refer to hotdogs as sandwiches. In Wittgenstein’s view, the meaning of a phrase isn’t determined by a set of formal criteria. Instead, language is use.

In a similar spirit, in his book Designing Engineers, Louis Bucciarelli proposed that we should understand “knowing how something works” to mean knowing how to work it. He begins with an anecdote about telephones:

> A few years ago, I attended a national conference on technological literacy… One of the main speakers, a sociologist, presented data he had gathered in the form of responses to a questionnaire. After a detailed statistical analysis, he had concluded that we are a nation of technological illiterates. As an example, he noted how few of us (less than 20 percent) know how our telephone works.
>
> This statement brought me up short. I found my mind drifting and filling with anxiety. Did I know how my telephone works?

Bucciarelli tries to get at what the speaker actually intended by “knowing how a telephone works”.

> I squirmed in my seat, doodled some, then asked myself, What does it mean to know how a telephone works? Does it mean knowing how to dial a local or long-distance number? Certainly I knew that much, but this does not seem to be the issue here.

He dives down a level of abstraction into physical implementation details.

> No, I suspected the question to be understood at another level, as probing the respondent’s knowledge of what we might call the “physics of the device.”

> I called to mind an image of a diaphragm, excited by the pressure variations of speaking, vibrating and driving a coil back and forth within a magnetic field… If this was what the speaker meant, then he was right: Most of us don’t know how our telephone works.

But then Bucciarelli continues to elaborate this scenario:

> Indeed, I wondered, does [the speaker] know how his telephone works? Does he know about the heuristics used to achieve optimum routing for long distance calls? Does he know about the intricacies of the algorithms used for echo and noise suppression? Does he know how a signal is transmitted to and retrieved from a satellite in orbit? Does he know how AT&T, MCI, and the local phone companies are able to use the same network simultaneously? Does he know how many operators are needed to keep this system working, or what those repair people actually do when they climb a telephone pole? Does he know about corporate financing, capital investment strategies, or the role of regulation in the functioning of this expansive and sophisticated communication system?

Does anyone know how their telephone works?

At this point, I couldn’t help thinking of that classic tech interview question, “What happens when you type a URL into the address bar of your web browser and hit enter?” It’s a fun question to ask precisely because there are so many different aspects to the overall system that you could potentially dig in on (Do you know how your operating system services keyboard interrupts? How your local Wi-Fi protocol works?). Can anyone really say that they understand everything that happens after hitting enter?

Because no individual possesses this type of comprehensive knowledge of engineered systems, Bucciarelli settles on a definition that relies on active knowledge: knowing-how-it-works as knowing-how-to-use-it.

> No, the “knowing how it works” that has meaning and significance is knowing how to do something with the telephone—how to act on it and react to it, how to engage and appropriate the technology according to one’s needs and responsibilities.

I thought of Bucciarelli’s definition while reading Andy Clark’s book Surfing Uncertainty. In Chapter 6, Clark claims that our brain does not need to account for all of its sensory input to build a model of what’s happening in the world. Instead, it relies on simpler models that are sufficient for determining how to act:

> This may well result … in the use of simple models whose power resides precisely in their failing to encode every detail and nuance present in the sensory array. For knowing the world, in the only sense that can matter to an evolved organism, means being able to act in that world: being able to respond quickly and efficiently to salient environmental opportunities.

The through line that connects Wittgenstein, Bucciarelli, and Clark is the idea of knowledge as an active thing. Knowing implies using and acting. To paraphrase David Woods, knowledge is a verb.