With the value of AI coding tools now firmly established in the software industry, the next frontier is AI SRE tools. There are a number of AI SRE vendors. In some cases, vendors are adding AI SRE functionality to extend their existing product lineup: a quick online search reveals offerings such as PagerDuty’s SRE Agents, Datadog’s Bits AI SRE, incident.io’s AI SRE, Microsoft’s Azure SRE Agent, and Rootly’s AI SRE. There are also a number of pure-play AI SRE startups: the ones I’ve heard of are Cleric, Resolve.ai, Anyshift.io, and RunWhen. My sense of the industry is that AI SRE is currently in the evaluation phase, compared to the coding tools, which are in the adoption phase.

What I want to write about today is not so much what these AI tools do contribute to resolving incidents, but rather what they don’t contribute. These tools are focused on diagnostic and mitigation work. The idea is to try to automate as much as possible the work of figuring out what the current problem is, and then resolving it. I think most of the focus is, rightly, on the diagnostic side at this stage, although I’m sure automated resolution is also something being pursued. But what none of these tools try to do, as far as I can tell, is incident management.

The work of incident response always involves a group of engineers: some of them are officially on-call, and others are just jumping in to help. Incident management is the coordination work that helps this ad-hoc team of responders work together effectively to get the diagnostic and remediation work done. Because of this, we often say that incident response is a team sport. Incidents involve some sort of problem with the system as a whole, and because everybody in the organization only has partial knowledge of the whole system, we typically need to pool that knowledge together to make sense of what’s actually happening right now in the system. For example, if a database is currently being overloaded, the folks who own the database could tell you that there’s been a change in query pattern, but they wouldn’t be able to tell you why that change happened. For that, you’d need to talk to the team that owns the system that makes those queries.

Fixation: the single-agent problem

Another reason why we need multiple people responding to incidents is that humans are prone to a problem known as fixation. You might know it by the more colloquial term tunnel vision. A person will look at a problem from a particular perspective, and that can be problematic if the person addressing the problem has a perspective that is not well-matched to solving that problem. You can even see fixation behavior in the current crop of LLM coding tools: they will sometimes keep going down an unproductive path in order to implement a feature or try to resolve an error. While I expect that future coding agents will suffer less from fixation, given that genuinely intelligent humans frequently suffer from this problem, I don’t think that we’ll ever see an individual coding agent get to the point where it completely avoids fixation traps.

One solution to the problem of fixation is to intentionally inject a diversity of perspectives by having multiple individuals attack the problem. In the case of AI coding tools, we deal with the problem of fixation by having a human supervise the work of the coding agent. The human spots when the agent falls down a fixation rabbit hole, and prompts the agent to pursue a different strategy in order to get it back on track. Another way to leverage multiple individuals is to deliberately have them pursue different strategies. For example, in the early aughts, there was a lot of empirical software engineering research into an approach called perspective-based reading for reviewing software artifacts like requirements or design documents. The idea is that you would have multiple reviewers, and you would explicitly assign each reviewer a particular perspective. For example, let’s say you wanted to get a requirements document reviewed. You could have one reviewer read it from the perspective of a user, another from the perspective of a designer, and a third from the perspective of a tester. The idea here is that reading from a different perspective would help identify different kinds of defects in the artifact.

Getting back to incidents, the problem of fixation arises when a responder latches on to one particular hypothesis about what’s wrong with the system, and keeps following that line of investigation even though it doesn’t bear fruit. As discussed above, having responders with a diverse set of perspectives provides a defense against fixation. This may take the form of pursuing multiple lines of investigation in parallel, or even just somebody in the response asking a question like, “How do we know the problem isn’t Y rather than X?”

I’m convinced that an individual AI SRE agent will never be able to escape the problem of fixation, and so incident response will necessarily involve multiple agents. Yes, there will be some incidents where a single AI agent is sufficient. But incident response is a 100% game: you need to recover from every incident, not just most of them. That means that eventually you’ll need to deploy a team of agents, whether they’re humans, AI, or a mix. And that means incident response will require coordination: in particular, maintaining common ground.

Maintaining common ground is active work

During an incident, many different things are happening at once. There are multiple signals that you need to keep track of, like “what’s the current customer impact?”, “is the problem getting better, worse, or staying the same?”, “what are the current hypotheses?”, and “which graphs support or contradict those hypotheses?” The responders will be doing diagnostic work, and they’ll be performing interventions on the system, sometimes to try to mitigate (e.g., “roll back that feature flag that aligns in time”), and other times to support the diagnostic work (e.g., “we need to make a change to figure out if hypothesis X is actually correct”).

The incident manager helps to maintain common ground: they make sure that everybody is on the same page, by doing things like helping bring people up to speed on what’s currently going on, and ensuring people know which lines of investigation are currently being pursued and who (if anyone) is currently pursuing them.
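To make that bookkeeping a little more concrete, here is a minimal sketch in Python of the kind of shared state an incident manager keeps in their head. Everything in it is hypothetical: the `Investigation` and `CommonGround` names, the fields, and the status values are my own illustrative assumptions, not taken from any vendor's product. It only captures the passive record, not the much harder work of noticing when responders have drifted out of sync.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Investigation:
    """One line of investigation (hypothetical structure, for illustration)."""
    hypothesis: str
    owner: Optional[str] = None  # None means nobody is currently pursuing it
    status: str = "open"  # assumed values: "open", "supported", "ruled_out"


@dataclass
class CommonGround:
    """The shared picture of the incident that responders need to agree on."""
    customer_impact: str = "unknown"
    investigations: List[Investigation] = field(default_factory=list)

    def unowned(self) -> List[Investigation]:
        # Open hypotheses that nobody is pursuing are a coordination gap.
        return [
            inv for inv in self.investigations
            if inv.status == "open" and inv.owner is None
        ]

    def summary(self) -> str:
        # A plain-text answer to "what's going on?" for someone just joining.
        lines = [f"Customer impact: {self.customer_impact}"]
        for inv in self.investigations:
            who = inv.owner or "nobody"
            lines.append(f"- {inv.hypothesis} [{inv.status}], pursued by {who}")
        return "\n".join(lines)


state = CommonGround(customer_impact="checkout errors")
state.investigations.append(Investigation("bad deploy", owner="alice"))
state.investigations.append(Investigation("database overload"))
print(state.summary())
```

A call to `state.unowned()` here would surface the database overload hypothesis, which is exactly the sort of gap an incident manager would raise with the group.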

If a responder is just joining an incident, an AI SRE agent is extremely useful as a summary machine. You can ask it the question, “what’s going on?”, and it can give you a concise summary of the state of play. But this is a passive use case: you prompt it, and it gives a response. And because the state of the world is changing rapidly during the incident, the accuracy of that answer will decay rapidly with time. Keeping the current state of things up to date in the minds of the responders is an active struggle against entropy.

An effective AI incident manager would have to be able to identify what type of coordination help people need, and then provide that assistance. For example, the agent would have to be able to identify when the responders (be they human or agent) were struggling and then proactively take action to assist. It would need a model of the mental models of the responders to know when to act and what action to take in order to re-establish common ground.

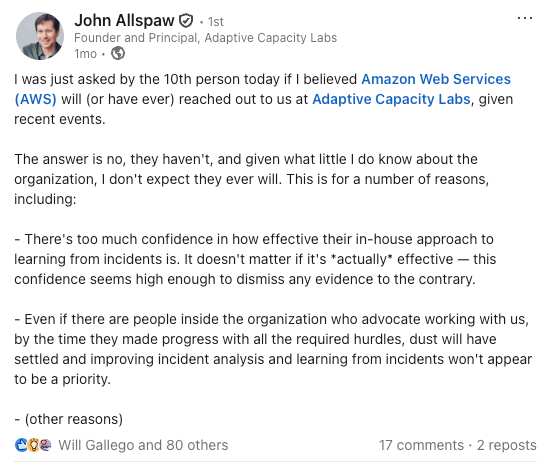

Perhaps there is work in the AI SRE space to automate this sort of coordination work. But if there is, I haven’t heard of it yet. The focus today is on creating individual responder agents. I think these agents will be an effective addition to an incident response team. I’d love it if somebody built an effective incident management AI bot. But it’s a big leap from AI SRE agent to AI incident management agent. And it’s not clear to me how well the coordination problem is understood by vendors today.