There’s a group of friends that I have a standing videochat with every couple of weeks. We had been meeting at 8am, but several people developed conflicts at that time, including me. I have a teenager who starts school at 8am, and I’m responsible for getting them to school in the morning (I like to leave the house around 7:40am), which prevented me from participating.

As a group, we decided to reschedule the chat to 7am. This works well for me, because I get up at 6. Today was the first day meeting at the new scheduled time. I got up as I normally do, and was sure to be quiet so as not to wake my wife, Stacy; she sleeps later than I do, but she gets up early enough to rouse our kids for school. I even closed the bedroom door so that any noise I made from the videochat wouldn’t disturb her.

I was on the videochat, taking part in the conversation in hushed tones, when I looked over at the time. I saw it was 7:25am, which is about fifteen minutes before I start getting ready to leave the house. Usually, the rest of the household is up, showering, eating breakfast. But I hadn’t heard a peep from anyone. I went upstairs to discover that nobody else had gotten up yet.

It turns out that my typical morning routine was acting as a natural alarm clock for Stacy. My alarm goes off at 6am every weekday, and I get up, but Stacy stays in bed. However, the noises from my normal morning routine are what rouse her, typically around 7am. Today, I was careful to be very quiet, and so she didn’t wake up. I didn’t know that I was functioning as an alarm clock for her! That’s why I was careful to be quiet, and why I didn’t even think to mention the new videochat time to her.

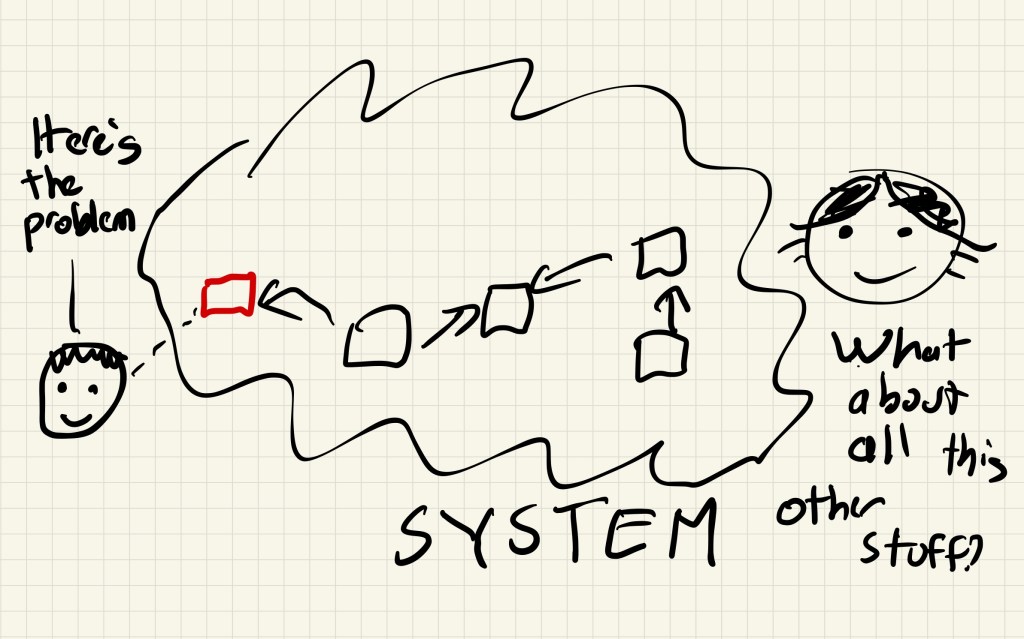

I suspect this failure mode is more common than we realize: there is a process inside a system, and over time the process comes to fulfill some unintended, ancillary functional role, and there are people who participate in this process who aren’t even aware of this function.

As an example, consider Chaos Monkey. Chaos Monkey’s intended function is to ensure that engineers design their services to withstand a virtual machine instance failing unexpectedly, by increasing their exposure to this failure mode. But Chaos Monkey also has the unintended effect of recycling instances. For teams that deploy very infrequently, their service might exhibit problems with long-lived instances that they never notice because Chaos Monkey tends to terminate instances before they hit those problems. Now imagine declaring an extended period of time where, in the interest of reducing risk(!), no new code is deployed, and Chaos Monkey is disabled.
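To make that scenario concrete, here is a toy simulation. It is only a sketch under assumptions of my own, not a model of how Chaos Monkey actually schedules terminations: each instance has a hypothetical latent bug that crashes it once it has been up for `leak_limit_days`, and each day a random terminator kills any given instance with probability `kill_probability`. The function name, parameters, and numbers are all made up for illustration.

```python
import random


def simulate(days, fleet_size, leak_limit_days, kill_probability, seed=0):
    """Count crashes caused by a (hypothetical) long-uptime bug.

    Assumptions: no deploys happen during the window, every instance
    starts fresh, and a crashed or terminated instance is replaced
    immediately by a new one with age 0.
    """
    rng = random.Random(seed)
    ages = [0] * fleet_size
    failures = 0
    for _ in range(days):
        for i in range(fleet_size):
            ages[i] += 1
            if ages[i] >= leak_limit_days:
                # The latent bug fires: this is the problem nobody has seen.
                failures += 1
                ages[i] = 0
            elif rng.random() < kill_probability:
                # Random termination recycles the instance as a side effect.
                ages[i] = 0
    return failures


# Same 60-day freeze, with and without random terminations.
with_terminator = simulate(days=60, fleet_size=20, leak_limit_days=30,
                           kill_probability=0.2)
without_terminator = simulate(days=60, fleet_size=20, leak_limit_days=30,
                              kill_probability=0.0)

print("crashes with random terminations:   ", with_terminator)
print("crashes without random terminations:", without_terminator)
```

With terminations turned off, every instance in this toy model reaches the 30-day limit twice during the 60-day window, so the fleet of 20 sees 40 crashes. With terminations on, instances rarely live long enough to reach the limit, which is exactly why the bug stays invisible.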

When you turn something off, you never know what might break. In some cases, nobody in the system knows.