This post is an elaboration of a shorter post I wrote about five years ago.

The two management giants of the mid-twentieth century were Peter Drucker and W. Edwards Deming. Ironically, while Drucker hailed from Austria-Hungary (like me, Drucker emigrated to the U.S. as an adult) and Deming was born in the U.S., it was Drucker who proved to be more influential in America. Deming’s influence was much greater in Japan than it ever was in the U.S. If you’ve ever been at an organization that uses OKRs, then you have worked in the shadow of Drucker’s legacy. While you can tell a story about how Deming influenced Toyota, and Toyota inspired the lean movement, I would still describe management in the U.S. as Deming in exile. Deming explicitly stated that management by objectives isn’t leadership, and I think you’d be hard-pressed to find managers in American companies who would agree with that sentiment.

Here I want to talk about why I think Drucker’s ideas proved stickier than Deming’s in the U.S. It all comes down to the nature of organizations and people.

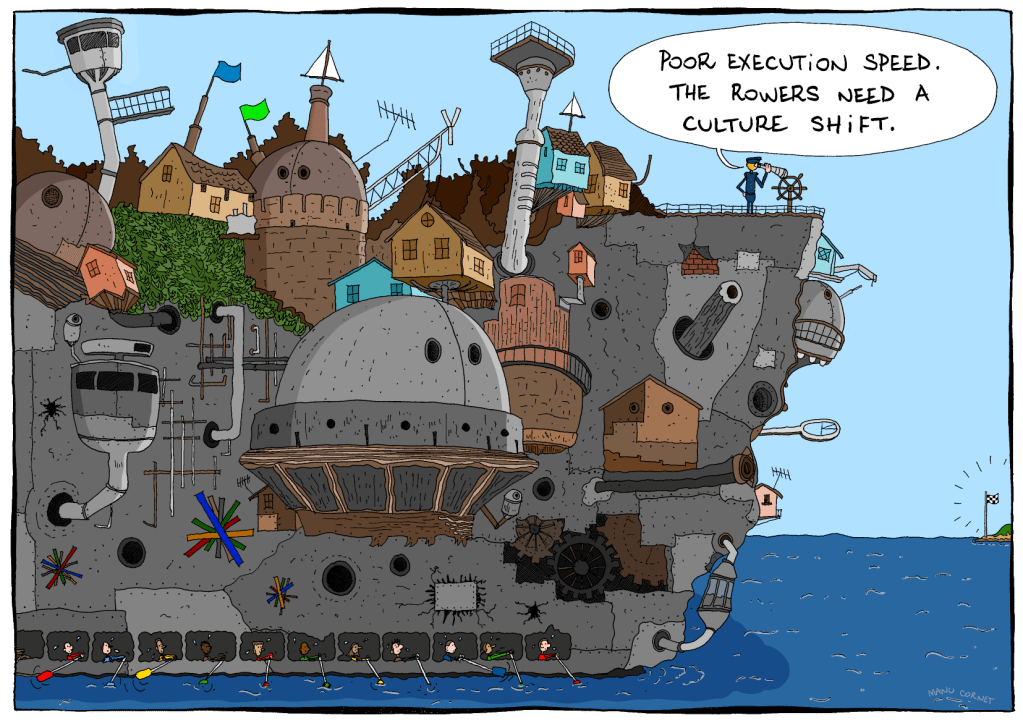

An organization is a big, hairy, complex mess, and the bigger the organization is, the hairier and more complex it gets. Managers, on the other hand, have a very finite amount of bandwidth. There are only so many hours in a day, and this number does not increase with the complexity of an organization. And, let’s face it, they’re spending something close to 100% of that bandwidth attending meetings.

How is a manager to make sense of this mess?

OKRs as a mess-reduction mechanism

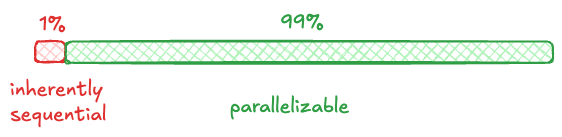

In the Druckerian approach of OKRs, you set a small number of objectives, identify quantifiable key results for each objective that signal whether progress toward it is being made, and then monitor those key results. Key results reduce the bandwidth required to make sense of what’s happening in the system. Instead of facing the blooming, buzzing confusion of the entire system, monitoring key results lets you filter out all of that unnecessary detail and focus specifically on the aspects that are relevant to the bandwidth-limited manager. It’s no coincidence that when John Doerr wrote a book about his experience with OKRs at Intel and how he brought them to Google, he titled it Measure What Matters.

The beauty of a set of key results is that they take the messiness of the system as input and create a neat summary in spreadsheet or slide format as output.

Deming’s critique

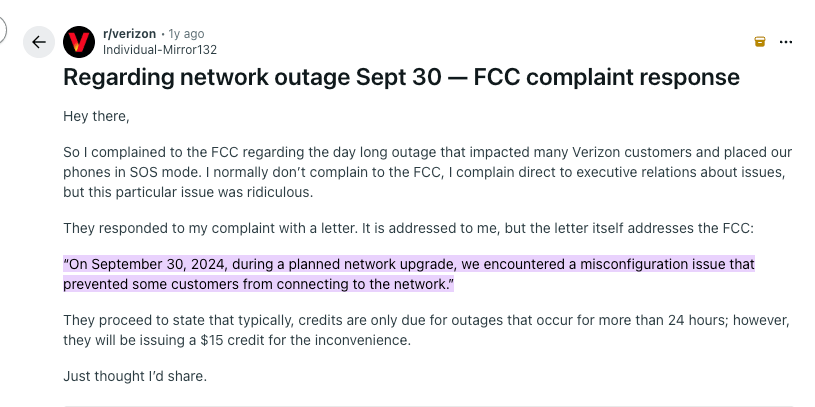

Deming’s approach to the problem of management was radically different from Drucker’s. In his book Out of the Crisis, Deming puts his criticism of the Drucker-ish approach in stark terms. He uses the term deadly disease to describe managing through numerical targets.

Eliminate management by objective. Eliminate management by numbers, numerical goals. Substitute leadership.

Deming’s perspective can be summed up with the old saying: if you don’t change the system, the system doesn’t change. He argued that if you wanted improvements, you had to make systemic changes. Furthermore, you had to understand the system if you wanted to come up with a system improvement that would actually work.

Classical control versus statistical control

Deming was not opposed to the idea of goals: indeed, he was a passionate believer that management should strive to improve quality and productivity, and both of those are goals. He was also not opposed to metrics: he was an advocate of applying Walter Shewhart’s statistical techniques to management. It’s the use of metrics that’s radically different in the two approaches.

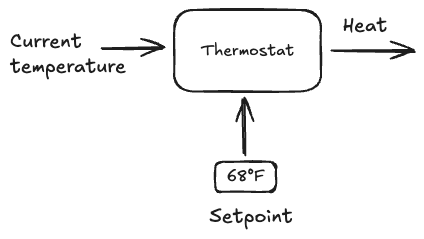

The Druckerian approach is akin to a classical control system, like a thermostat. Specifying the key results is like setting the desired temperature (say, 68°F); the thermostat then generates output to bring the room’s current temperature in line with that setpoint.

The idea here is that you give the organization a setpoint, and it will implement the control system that will work to achieve the setpoint.
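For contrast with what follows, here is a minimal sketch of the classical-control side of the analogy: an on/off thermostat with a small deadband around the setpoint, driving a toy room model. The deadband width, the heating and heat-loss rates, and the function names (`heater_should_run`, `simulate_room`) are all hypothetical; the point is only that the logic compares a measurement to a setpoint and acts to close the gap.

```python
def heater_should_run(temp: float, heating: bool,
                      setpoint: float = 68.0, deadband: float = 0.5) -> bool:
    """On/off (bang-bang) control with a deadband around the setpoint."""
    if temp < setpoint - deadband:
        return True   # too cold: turn the heater on
    if temp > setpoint + deadband:
        return False  # too warm: turn the heater off
    return heating    # inside the deadband: keep the current state


def simulate_room(start_temp: float, steps: int, setpoint: float = 68.0) -> list[float]:
    """Simulate a toy room and return the temperature after each time step."""
    temp, heating = start_temp, False
    history = []
    for _ in range(steps):
        heating = heater_should_run(temp, heating, setpoint)
        # Toy physics (hypothetical): the heater adds 0.3°F per step,
        # and the room always loses 0.1°F per step to the outside.
        temp += (0.3 if heating else 0.0) - 0.1
        history.append(temp)
    return history


temps = simulate_room(start_temp=60.0, steps=200)
settled = temps[50:]  # skip the initial warm-up from 60°F
print(f"after warm-up: min={min(settled):.1f}°F, max={max(settled):.1f}°F")
```

Starting from 60°F, the room warms up and then cycles in a narrow band around the 68°F setpoint. The controller knows nothing about why the room is losing heat; it only reacts to the gap between the reading and the setpoint.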

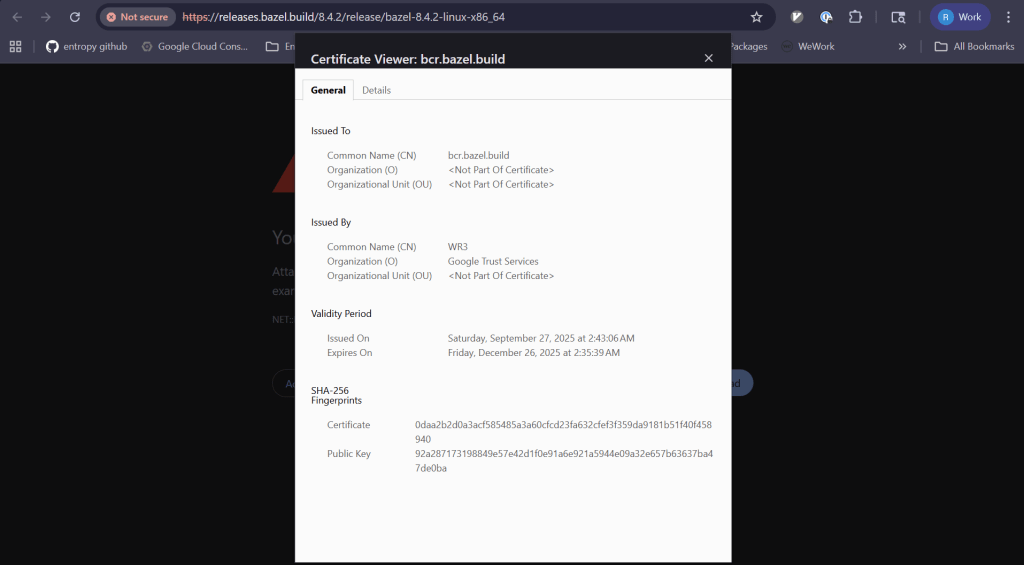

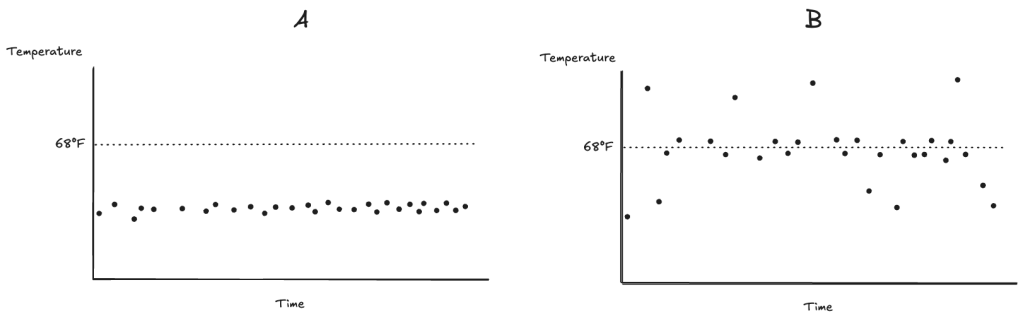

Deming wrote in explicit terms about control, but he meant it in a different sense: he wrote about statistical process control, which is about the variability of the output. I’ve written about statistical process control before, but here I’ll touch on the concepts again, with an example. Let’s imagine we are observing the behavior of two brands of thermostats, A and B, each controlling the temperature of a different room. Both thermostats have the same setpoint of 68°F. We observe their behavior by graphing the temperature of the two rooms over time.

Note how the points for thermostat A all fall within a narrow band, while for thermostat B there are many more outliers. We’d say that thermostat A is under statistical process control whereas thermostat B is not, even though the average temperature for thermostat B is closer to the setpoint than the average temperature for thermostat A. (In practice, you’d draw a control chart to identify whether the system is under statistical control.)

Deming argued that you had to understand whether your system was under statistical control in order to determine which intervention would actually improve it. For example, if your system was out of control, the next intervention would be a qualitative investigation into the outliers. On the other hand, if the system was under statistical control, then you’d have to figure out what systemic change to make to improve things.
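To make the control-chart idea concrete, here is a minimal sketch of an individuals (XmR) chart, one common kind of Shewhart control chart, applied to two made-up series of room temperatures, followed by the intervention rule described above. The readings, the function names (`xmr_limits`, `out_of_control_points`, `next_intervention`), and the choice of an XmR chart over other chart types are assumptions for illustration. The 2.66 factor is the standard constant for individuals charts (3/d2, with d2 = 1.128 for moving ranges of two points).

```python
from statistics import mean

# Scaling factor for an individuals (XmR) chart: 3 / d2, where d2 = 1.128
# for moving ranges of two consecutive points.
XMR_FACTOR = 2.66


def xmr_limits(readings: list[float]) -> tuple[float, float, float]:
    """Return (lower limit, center line, upper limit) for an individuals chart."""
    center = mean(readings)
    moving_ranges = [abs(b - a) for a, b in zip(readings, readings[1:])]
    spread = XMR_FACTOR * mean(moving_ranges)
    return center - spread, center, center + spread


def out_of_control_points(readings: list[float]) -> list[int]:
    """Indices of readings that fall outside the chart's control limits."""
    lower, _, upper = xmr_limits(readings)
    return [i for i, x in enumerate(readings) if x < lower or x > upper]


def next_intervention(readings: list[float]) -> str:
    """Map the chart's verdict onto the two kinds of intervention in the text."""
    if out_of_control_points(readings):
        return "investigate the outliers"  # not under statistical control
    return "change the system"  # under control: improvement needs a systemic change


# Hypothetical readings in °F, made up for illustration; both setpoints are 68°F.
thermostat_a = [68.9, 69.1, 68.8, 69.0, 68.9, 69.2, 68.8, 69.0, 69.1, 68.9]
thermostat_b = [68.0, 67.9, 68.1, 68.0, 72.0, 68.0, 67.9, 68.1, 68.0, 64.0,
                68.1, 67.9, 68.0, 68.1, 67.9, 68.0]

for name, readings in [("A", thermostat_a), ("B", thermostat_b)]:
    lower, center, upper = xmr_limits(readings)
    print(f"{name}: mean={center:.2f}°F, limits=({lower:.2f}, {upper:.2f}), "
          f"outliers at {out_of_control_points(readings)} -> {next_intervention(readings)}")
```

With these made-up numbers, all of A’s readings stay inside its limits, while B’s two spikes (indices 4 and 9) fall outside, even though B’s mean is exactly 68°F and A’s is about 69°F. One caveat of this simple version: computing the limits from the same data they are judged against lets large outliers widen the limits, which is why practitioners often compute limits from a stable baseline period instead.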

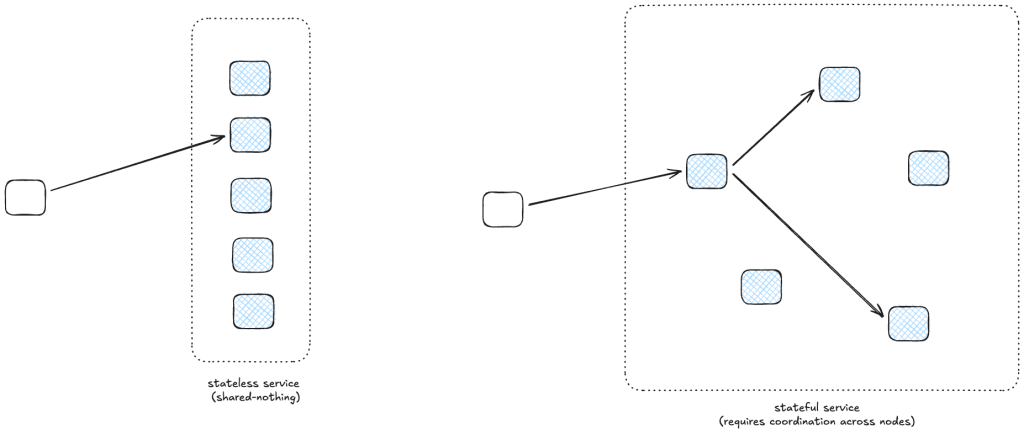

Note how you build a classical control system, whereas you observe whether your system is under statistical process control. Statistical process control is about understanding the system.

Drucker makes a manager’s life easier, Deming makes it harder

One of the virtues of OKRs is that they are straightforward for managers to apply. You set direction by specifying objectives, and you enforce accountability by monitoring key results. Applying Deming’s approach, on the other hand, requires a much greater commitment of management bandwidth. Drucker offers a control mechanism with a bounded amount of information, whereas Deming requires a never-ending research program, with no upper bound on the kind of information that might be relevant. In fact, the information might even be unobservable. Deming approvingly quotes the statistician Lloyd Nelson, who said:

The most important figures needed for management of any organization are unknown and unknowable.

Myself, I’m in the Deming camp, but I can see why Drucker’s ideas won out. In reliability, we talk about “making the right thing easy and the wrong thing hard”; other people call this the Pit of Success. The rationale is that people will tend to do the easy thing over the hard thing. And managers are people too. But sometimes the right thing to do is the harder one, and nothing can be done about that.